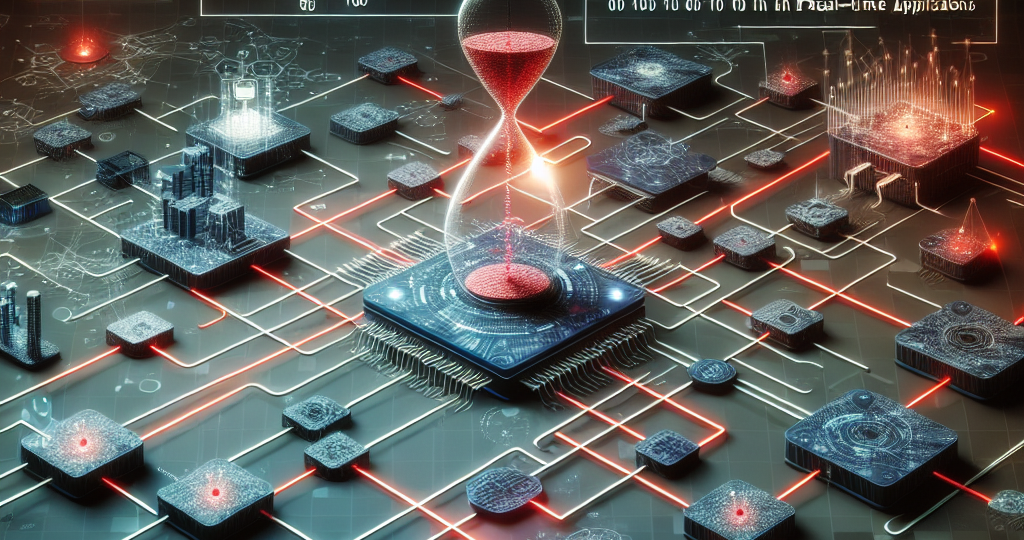

Understanding Network Latency in Real-Time Applications

September 12, 2024 | by Willie Brockington

Network latency in real-time applications is a crucial factor that can make or break the user experience. It refers to the delay in data transmission over a network, impacting how quickly information travels from the sender to the receiver. Understanding network latency is essential in ensuring smooth and seamless communication in real-time applications like video conferencing, online gaming, and live streaming. By minimizing latency, developers can improve responsiveness and overall performance, leading to a more satisfying user experience. In this article, we will explore the impact of network latency on real-time applications and discuss strategies to reduce latency for optimal performance.

The Fundamentals of Network Latency

Definition of network latency

Network latency refers to the delay that occurs when data packets travel from the source to the destination in a network. It is essentially the time it takes for data to be transmitted from one point to another. Latency is typically measured in milliseconds and can significantly impact the performance of real-time applications.

Factors influencing network latency

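- Distance: Signals propagate at a finite speed (roughly 200,000 km per second in optical fiber, about two-thirds the speed of light in a vacuum), so the longer the physical path between source and destination, the longer packets take to arrive.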

- Network congestion: High network traffic can lead to congestion, causing delays in transmitting data packets.

- Routing inefficiencies: Inefficient routing paths can increase latency as data packets take longer routes to reach their destination.

- Bandwidth limitations: On a lower-bandwidth link, each packet takes longer to transmit, and links running near capacity are more prone to congestion. Both effects raise latency.

Importance of low latency in real-time applications

In real-time applications such as video conferencing, online gaming, and financial trading, low latency is crucial for ensuring smooth and responsive user experiences. High latency can result in delays, lag, and disruptions in real-time communication and interactions, impacting user satisfaction and overall performance. Therefore, minimizing network latency is essential for optimizing the performance of real-time applications and enhancing user engagement.

Types of Latency in Networks

1. Transmission Latency

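Transmission latency (also called serialization delay) is the time it takes a device to push all of a packet's bits onto the link. It is often confused with propagation delay, the time a signal takes to travel from the sender to the receiver, but the two are distinct: transmission latency depends on the packet size and the link's bandwidth, while propagation delay depends on the physical distance between the devices and the medium through which the signal travels, and can never beat the speed of light.

Definition and Explanation:

- Transmission latency occurs because a link carries only a finite number of bits per second, so larger packets and slower links take longer to transmit. Propagation delay adds to it, and longer routing paths increase that component. Congestion contributes yet another separate component, queuing latency, which is covered below.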

Impact on Real-Time Applications:

- In real-time applications such as video conferencing, online gaming, and live streaming, transmission latency can significantly impact the user experience. High transmission latency can lead to delays in communication, lag in gameplay, and buffering in streaming services. This delay can result in decreased quality, synchronization issues, and disruptions in the real-time interaction between users.
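To make the distinction concrete, the sketch below estimates the two delay components for a single packet. It assumes a signal speed of about 200,000 km/s in optical fiber; the function names, packet size, link speed, and distance are illustrative values, not measurements.

```python
# Rough one-way delay estimate: transmission (serialization) + propagation.
# Assumption: signals in optical fiber travel at about 200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000


def transmission_delay_ms(packet_bytes: int, bandwidth_bps: float) -> float:
    """Time to push every bit of the packet onto the link, in milliseconds."""
    return packet_bytes * 8 / bandwidth_bps * 1000


def propagation_delay_ms(distance_km: float,
                         speed_km_per_s: float = FIBER_SPEED_KM_PER_S) -> float:
    """Time for the signal to cover the physical distance, in milliseconds."""
    return distance_km / speed_km_per_s * 1000


# Illustrative case: a 1,500-byte packet on a 10 Mbit/s link, sent 4,000 km.
tx = transmission_delay_ms(1500, 10_000_000)  # about 1.2 ms
prop = propagation_delay_ms(4000)             # about 20 ms
print(f"transmission={tx:.1f} ms, propagation={prop:.1f} ms, total={tx + prop:.1f} ms")
```

In this example the 1.2 ms of transmission latency is small next to 20 ms of propagation delay, which suggests why shortening the physical path can matter more than adding bandwidth once links are reasonably fast.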

2. Processing Latency

Processing latency refers to the time it takes for a device or system to handle incoming data, perform necessary computations, and generate a response. This type of latency is influenced by the efficiency of the hardware and software components involved in processing the data.

Definition and explanation:

- Processing latency occurs at various points within a network, including routers, switches, servers, and end-user devices. Each of these components contributes to the overall processing time of a network transaction.

- Factors that can impact processing latency include the complexity of the data being processed, the workload on the processing device, and the efficiency of the algorithms used for computation.

Examples of processing latency in real-time applications:

- In video conferencing applications, processing latency can occur when encoding and decoding video and audio streams. The time taken to compress and decompress these streams can introduce delays in the transmission of real-time data.

- Online gaming platforms experience processing latency when handling player inputs, running game logic, and synchronizing game states between multiple players. Delays in processing these actions can lead to lags and disruptions in gameplay.

- Financial trading systems rely on low processing latency to execute trades quickly and accurately. The delay in processing market data and executing buy or sell orders can result in missed opportunities or financial losses.
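A simple way to observe processing latency on a server or end-user device is to time the work directly. The sketch below times JSON encoding and decoding of a hypothetical game-state snapshot with Python's `time.perf_counter`; the helper name `median_latency_ms` and the snapshot layout are illustrative assumptions, and JSON stands in for whatever serialization format or codec a real application uses.

```python
import json
import statistics
import time


def median_latency_ms(fn, *args, repeats: int = 1000) -> float:
    """Call fn(*args) repeatedly and return the median wall-clock time in ms."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        samples.append((time.perf_counter() - start) * 1000)
    return statistics.median(samples)


# Hypothetical snapshot a game server might serialize on every tick.
state = {"tick": 1024,
         "players": [{"id": i, "x": i * 1.5, "y": i * 2.0} for i in range(64)]}

payload = json.dumps(state)
encode_ms = median_latency_ms(json.dumps, state)
decode_ms = median_latency_ms(json.loads, payload)
print(f"encode: {encode_ms:.3f} ms, decode: {decode_ms:.3f} ms")
```

Reporting the median rather than the mean keeps occasional outliers, such as a garbage-collection pause, from skewing the result.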

3. Queuing Latency

Queuing latency refers to the delay that occurs when data packets are held in a queue before being forwarded to their destination. This delay is a result of congestion within the network, leading to packets waiting in line to be processed. In real-time applications, queuing latency can have significant impacts on performance and user experience.

Explanation of queuing latency:

- When network devices, such as routers or switches, become overwhelmed with incoming data packets, they store excess packets in a queue.

- The length of time a packet spends in the queue is determined by factors such as network traffic volume, device processing capabilities, and queue management algorithms.

- Queuing latency grows with queue depth, the number of packets already waiting ahead of a new arrival. Devices can be configured with separate queues for different traffic classes so that certain types of traffic are forwarded ahead of others.
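The following sketch simulates a single first-in, first-out queue on an outgoing link to show how queuing latency builds during a burst. It assumes every packet takes the same fixed time to transmit; the function name, arrival times, and the 1.2 ms service time are illustrative.

```python
def fifo_waiting_times(arrivals_ms, service_ms):
    """Return how long each packet waits in a FIFO queue before transmission starts.

    arrivals_ms: arrival times in milliseconds, in ascending order.
    service_ms: time to transmit one packet (assumed constant here).
    """
    waits = []
    link_free_at = 0.0  # moment the link finishes sending its current packet
    for arrival in arrivals_ms:
        start = max(arrival, link_free_at)  # wait if the link is still busy
        waits.append(start - arrival)
        link_free_at = start + service_ms
    return waits


# A burst of five packets arriving 0.5 ms apart on a link needing 1.2 ms per packet.
burst = [0.0, 0.5, 1.0, 1.5, 2.0]
print(fifo_waiting_times(burst, 1.2))
```

Because packets arrive every 0.5 ms but the link needs 1.2 ms per packet, each packet in the burst waits 0.7 ms longer than the one before it (0, 0.7, 1.4, 2.1, and 2.8 ms, up to floating-point rounding).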

Effects on real-time applications:

- In real-time applications like video conferencing or online gaming, queuing latency can result in delays in data transmission.

- These delays can lead to issues such as choppy audio or video, out-of-sync gameplay, and overall poor user experience.

- To mitigate the effects of queuing latency, network administrators may implement Quality of Service (QoS) policies to prioritize real-time traffic and reduce queuing delays.
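The prioritization that QoS policies apply can be sketched as a strict-priority scheduler. The traffic-class names and priority numbers below are hypothetical, and real network devices implement this in hardware or in the operating system's queuing layer rather than in application code.

```python
import heapq
import itertools

# Hypothetical traffic classes: a lower number is served first.
PRIORITY = {"voice": 0, "video": 1, "bulk": 2}


class PriorityScheduler:
    """Strict-priority packet scheduler: real-time classes always dequeue first."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker keeps FIFO order within a class

    def enqueue(self, traffic_class: str, packet: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[traffic_class], next(self._seq), packet))

    def dequeue(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


sched = PriorityScheduler()
arrivals = [("bulk", "backup-1"), ("voice", "rtp-1"), ("bulk", "backup-2"),
            ("video", "frame-1"), ("voice", "rtp-2")]
for cls, pkt in arrivals:
    sched.enqueue(cls, pkt)

order = [sched.dequeue() for _ in range(len(sched))]
print(order)  # ['rtp-1', 'rtp-2', 'frame-1', 'backup-1', 'backup-2']
```

Strict priority keeps real-time packets from waiting behind bulk transfers, but it can starve low-priority traffic under sustained load, which is why weighted scheduling schemes are also common.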

Measuring and Monitoring Latency

In real-time applications, measuring and monitoring latency is essential for maintaining performance and user experience. The tools and methods below help quantify latency levels and locate the source of problems when they arise.

Tools for Measuring Latency

- Ping: A commonly used tool for measuring latency by sending ICMP echo requests to a target host and calculating the round-trip time.

- Traceroute: Helps identify the network path packets take to reach a destination, allowing for latency analysis at each hop.

- Network Analyzers: Provide detailed insights into network traffic, packet loss, and latency issues by capturing and analyzing data packets.

- Latency Monitors: Dedicated tools that continuously monitor latency levels in real-time applications and generate alerts for any deviations from normal values.
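Sending ICMP echo requests from a program usually requires elevated privileges, so a common substitute is to time a TCP handshake, which takes roughly one round trip. The sketch below does this with the standard `socket` module; the function name and parameters are illustrative, and the figure it reports also includes DNS resolution if you pass a hostname instead of an IP address.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int, samples: int = 5,
                       timeout: float = 2.0) -> list:
    """Approximate RTT by timing TCP handshakes; None marks a failed attempt."""
    results = []
    for _ in range(samples):
        start = time.perf_counter()
        try:
            # create_connection returns once the three-way handshake completes.
            with socket.create_connection((host, port), timeout=timeout):
                results.append((time.perf_counter() - start) * 1000)
        except OSError:  # timeouts, refusals, and DNS failures all land here
            results.append(None)
    return results
```

For example, `tcp_connect_rtt_ms("example.com", 443)` returns a list of five timings in milliseconds, with `None` for any attempt that timed out or failed.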

Methods for Measuring Latency

- End-to-End Latency Testing: Involves measuring the total time taken for a data packet to travel from the source to the destination, reflecting the overall latency in the network.

- Round-Trip Time (RTT): Calculating the time taken for a signal to travel from the sender to the receiver and back, helping assess latency in bidirectional communication.

- Packet Loss Analysis: Monitoring packet loss rates to identify potential latency issues caused by network congestion or connectivity problems.

- Continuous Monitoring: Implementing automated monitoring processes to track latency levels over time and detect any fluctuations or anomalies promptly.
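Once RTT samples are collected, a few summary figures cover most of the methods above: round-trip time, packet loss rate, and variation between samples. The sketch below assumes lost probes are recorded as `None`, and it uses a simple jitter estimate (the mean absolute difference between consecutive RTTs) rather than the smoothed interarrival-jitter formula defined in RFC 3550. The function name and sample values are illustrative.

```python
import statistics


def summarize(samples_ms):
    """Summarize a non-empty list of RTT samples; None entries count as lost packets."""
    received = [s for s in samples_ms if s is not None]
    loss_pct = 100 * (len(samples_ms) - len(received)) / len(samples_ms)
    if not received:
        return {"loss_pct": loss_pct}
    # Simple jitter estimate: mean absolute change between consecutive RTTs.
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    return {
        "min": min(received),
        "avg": statistics.mean(received),
        "max": max(received),
        "jitter": statistics.mean(diffs) if diffs else 0.0,
        "loss_pct": loss_pct,
    }


stats = summarize([20.0, 22.0, None, 21.0, 25.0])
print(stats)
```

For this sample list, the summary reports 20% loss, an average of 22 ms, and a jitter of about 2.3 ms.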

By utilizing these tools and methods for measuring and monitoring latency, real-time applications can maintain optimal performance levels and deliver seamless user experiences. Regular assessment and analysis of latency metrics are essential for identifying and resolving network latency issues efficiently.
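Continuous monitoring can be as simple as keeping a sliding window of recent samples and alerting when the window as a whole drifts above a threshold. The sketch below uses the window's median so that a single spike does not trigger an alert while a sustained rise does; the class name, window size, and 50 ms threshold are hypothetical choices.

```python
import statistics
from collections import deque


class LatencyMonitor:
    """Sliding-window latency monitor that flags sustained increases."""

    def __init__(self, window: int = 20, threshold_ms: float = 100.0):
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically
        self.threshold_ms = threshold_ms

    def record(self, rtt_ms: float) -> bool:
        """Add a sample; return True if the window's median exceeds the threshold."""
        self.samples.append(rtt_ms)
        return statistics.median(self.samples) > self.threshold_ms


monitor = LatencyMonitor(window=5, threshold_ms=50.0)
alerts = [monitor.record(rtt) for rtt in [20, 22, 21, 90, 95, 98]]
print(alerts)
```

In this run the first five calls return `False`, because the early high readings do not yet move the median above 50 ms, and the sixth returns `True` once three of the five samples in the window exceed it.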

Strategies to Reduce Latency in Real-Time Applications

1. Network Optimization Techniques

In real-time applications, network optimization techniques play a crucial role in reducing latency and enhancing overall performance. Implementing these strategies can help in ensuring smoother data transmission and improved user experience. Two key network optimization techniques to consider are:

- Bandwidth Management: Bandwidth management involves allocating network resources so that real-time application data is transmitted first. Using the available bandwidth efficiently minimizes congestion and, with it, latency. This is typically achieved through Quality of Service (QoS) mechanisms that prioritize real-time traffic over non-essential data, ensuring timely delivery of critical information.

- Data Compression Methods: Compressing data before sending it reduces the number of bytes that must cross the network, which shortens transmission time and frees capacity for other traffic. Lossless compression algorithms reduce data size without compromising the integrity of the information being transmitted. Because compression and decompression themselves add processing latency, the technique pays off only when the time saved on the wire exceeds the time spent compressing.
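Whether compression reduces end-to-end latency depends on how the bytes saved on the wire compare with the time spent compressing. The sketch below uses Python's built-in `zlib` module on a hypothetical, repetitive JSON payload and compares the transmission time saved on an assumed 2 Mbit/s link with the compression time; real payloads and links will give different numbers, and the receiver also pays to decompress.

```python
import json
import time
import zlib


def wire_time_ms(num_bytes: int, bandwidth_bps: float) -> float:
    """Transmission time for num_bytes on a link of the given bandwidth."""
    return num_bytes * 8 / bandwidth_bps * 1000


# Hypothetical chat-history payload; repetitive JSON compresses well.
payload = json.dumps(
    [{"user": "alice", "msg": "hello", "seq": i} for i in range(500)]
).encode()

start = time.perf_counter()
compressed = zlib.compress(payload, 6)  # level 6 is zlib's default trade-off
compress_ms = (time.perf_counter() - start) * 1000

link_bps = 2_000_000  # assumed 2 Mbit/s uplink
saved_ms = wire_time_ms(len(payload), link_bps) - wire_time_ms(len(compressed), link_bps)
print(f"{len(payload)} -> {len(compressed)} bytes, "
      f"saves {saved_ms:.1f} ms on the wire, costs {compress_ms:.2f} ms to compress")
```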

Implementing effective network optimization techniques like bandwidth management and data compression can help in improving the responsiveness and reliability of real-time applications, ultimately enhancing user satisfaction and overall performance.
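One common bandwidth-management tool is a token bucket, which caps the average rate of a flow while permitting short bursts. The sketch below could keep a non-essential flow from saturating a link shared with real-time traffic; the class, the 1 Mbit/s rate, and the 3,000-byte burst size are illustrative assumptions, and a production shaper would normally queue non-conforming packets rather than simply reporting them.

```python
import time


class TokenBucket:
    """Token-bucket shaper: permits bursts up to capacity_bytes, refills at rate_bps."""

    def __init__(self, rate_bps: float, capacity_bytes: int, clock=time.monotonic):
        self.rate_bytes_per_s = rate_bps / 8  # convert bits/s to bytes/s
        self.capacity = capacity_bytes
        self.tokens = float(capacity_bytes)   # start with a full bucket
        self.clock = clock                    # injectable for testing
        self.last = clock()

    def allow(self, packet_bytes: int) -> bool:
        """Spend tokens and return True if the packet conforms; otherwise return False."""
        now = self.clock()
        refill = (now - self.last) * self.rate_bytes_per_s
        self.tokens = min(self.capacity, self.tokens + refill)
        self.last = now
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False


# Limit a hypothetical bulk-transfer flow to 1 Mbit/s with a 3,000-byte burst allowance.
bulk = TokenBucket(rate_bps=1_000_000, capacity_bytes=3000)
print([bulk.allow(1500) for _ in range(3)])
```

The `clock` parameter defaults to `time.monotonic`, which is unaffected by changes to the system clock; passing a fake clock makes the behavior deterministic in tests.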

2. Edge Computing

Edge computing refers to the practice of processing data near the edge of the network, where the data is generated, rather than relying on a centralized data center. This approach brings computation and data storage closer to the location where they are needed, reducing the distance data must travel and consequently decreasing latency in real-time applications.

Benefits of Edge Computing in Reducing Latency:

- Decreased Data Travel Distance: By processing data closer to the source, edge computing significantly reduces the distance that data needs to travel over the network. This proximity leads to faster data processing and reduced latency in real-time applications.

- Improved Response Times: Real-time applications that leverage edge computing can deliver quicker response times to user actions or requests due to the minimized latency. This is particularly crucial for applications requiring immediate interactions, such as online gaming or video conferencing.

- Enhanced Reliability: Edge computing enhances the reliability of real-time applications by reducing the dependency on a centralized data center. In case of network congestion or failures, edge nodes can continue to process data and maintain application functionality.

Examples of Real-Time Applications Leveraging Edge Computing:

- Autonomous Vehicles: Edge computing plays a vital role in autonomous vehicles by enabling real-time processing of sensor data for immediate decision-making at the vehicle level. This ensures rapid responses to changing road conditions without relying on a distant data center.

- IoT Devices: Internet of Things (IoT) devices often utilize edge computing to process data locally, reducing latency for time-sensitive applications such as smart home automation or industrial monitoring. This approach enables quicker device interactions and enhances overall user experience.

- Augmented Reality (AR) and Virtual Reality (VR): AR and VR applications benefit from edge computing to minimize latency during content delivery and interaction. By processing data closer to the user device, these applications can provide seamless, immersive experiences without noticeable delays.

3. Content Delivery Networks (CDNs)

Content Delivery Networks (CDNs) play a crucial role in reducing latency in real-time applications by strategically distributing content closer to end-users. This proximity minimizes the physical distance data needs to travel, thereby decreasing the time taken for information to reach its destination. CDNs achieve this through the following methods:

- Caching: CDNs store cached copies of content on servers in many geographic locations. When a user requests specific data, the CDN delivers it from the server nearest to the user, reducing latency significantly.

- Load Balancing: By distributing traffic across multiple servers, CDNs ensure that no single server becomes overwhelmed with requests. This helps maintain consistent performance and keeps latency low in real-time applications.

- Anycast Routing: CDNs often use anycast routing, which announces the same IP address from many locations so that the network delivers each user's traffic to the nearest one in routing terms. This not only minimizes latency but also improves reliability, because traffic is rerouted to another location if one fails.
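The caching behavior described above can be modeled with a small least-recently-used (LRU) cache: hits are served locally, and only misses pay the long round trip to the origin. This is a simplified sketch, not how any particular CDN works; the `EdgeCache` class, the `fetch_from_origin` callable, and the tiny capacity are hypothetical, and real CDNs also honor expiry rules such as HTTP `Cache-Control` headers.

```python
from collections import OrderedDict


class EdgeCache:
    """LRU cache standing in for a CDN edge server; misses fall through to the origin."""

    def __init__(self, fetch_from_origin, max_items: int = 1000):
        self.fetch_from_origin = fetch_from_origin  # hypothetical callable: key -> content
        self.max_items = max_items
        self.items = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.items:
            self.items.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.items[key]
        self.misses += 1
        content = self.fetch_from_origin(key)  # slow path: round trip to the origin
        self.items[key] = content
        if len(self.items) > self.max_items:
            self.items.popitem(last=False)  # evict the least recently used entry
        return content


cache = EdgeCache(lambda key: f"<content of {key}>", max_items=2)
for path in ["/a.js", "/b.css", "/a.js", "/c.png", "/b.css"]:
    cache.get(path)
print(cache.hits, cache.misses)  # 1 4
```

With room for only two items, the run produces one hit and four misses, because `/b.css` is evicted before it is requested again.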

When adding a CDN to a real-time application, developers need to configure caching rules and other network settings carefully to get the full benefit. Choosing a CDN provider with robust infrastructure and a wide geographic spread of servers helps real-time applications achieve lower latency, better responsiveness, and an improved user experience.

Challenges and Solutions in Addressing Latency Issues

Common challenges faced in minimizing latency

- Network Congestion: One of the primary challenges in addressing latency in real-time applications is network congestion. When multiple users are simultaneously accessing the network, data packets may experience delays in transmission, leading to increased latency. This can significantly impact the performance of real-time applications such as video conferencing or online gaming.

- Packet Loss: Another common challenge is packet loss, where data packets fail to reach their intended destination. This can be caused by network errors, hardware failures, or congestion. In real-time applications, even a small percentage of packet loss can result in delays and disruptions, affecting the overall user experience.

- Bandwidth Limitations: Limited bandwidth can also contribute to latency issues in real-time applications. When the available bandwidth is insufficient to handle the volume of data being transmitted, delays can occur as data packets queue up for transmission. This is particularly problematic for applications that require high data throughput, such as live streaming or interactive online platforms.

Innovative solutions to overcome latency in real-time applications

- Edge Computing: One innovative solution to reduce latency in real-time applications is edge computing. By processing data closer to the end-user, at the network edge rather than in centralized data centers, latency can be minimized. This approach reduces reliance on distant centralized servers, enabling faster response times and improved performance for real-time applications.

- Content Delivery Networks (CDNs): CDNs are another effective solution for reducing latency in real-time applications. By caching content on servers located closer to end-users, CDNs can deliver data more quickly and efficiently. This helps to alleviate network congestion and minimize delays, especially for content-heavy applications like video streaming or online gaming.

- Quality of Service (QoS) Optimization: Implementing QoS optimization techniques can also help mitigate latency issues in real-time applications. By prioritizing critical data packets and ensuring efficient network resource allocation, QoS mechanisms can improve the overall performance and responsiveness of real-time applications. This is particularly beneficial for applications that require consistent and reliable data transmission, such as voice over IP (VoIP) services.
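At the application level, a common way to take part in QoS is to mark outgoing packets with a Differentiated Services Code Point (DSCP) so that routers configured to honor it can prioritize them. The sketch below marks a UDP socket with Expedited Forwarding (DSCP 46), the class conventionally used for voice. Whether the marking has any effect depends entirely on network policy, since many networks ignore or rewrite DSCP values set by end hosts.

```python
import socket

# DSCP 46 (Expedited Forwarding) is the class conventionally used for voice traffic.
# DSCP occupies the upper six bits of the IPv4 TOS byte, so shift it left by two.
DSCP_EF = 46
TOS_EF = DSCP_EF << 2  # 184, i.e. 0xB8

sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
try:
    # Datagrams sent on this socket will now carry the EF marking.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, TOS_EF)
    marked = sock.getsockopt(socket.IPPROTO_IP, socket.IP_TOS)
finally:
    sock.close()

print(hex(marked))
```

On Linux the read-back value is `0xb8`; some other platforms ignore or restrict the `IP_TOS` option.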

In short, addressing latency challenges in real-time applications requires a combination of proactive measures and innovative solutions to optimize network performance and enhance user experience. By understanding the underlying causes of latency and implementing targeted strategies, organizations can effectively mitigate delays and ensure seamless operation of their real-time applications.

Future Trends in Managing Network Latency

The future of managing network latency in real-time applications is being shaped by rapidly evolving technologies. As demand for seamless real-time communication and data transfer grows, several key trends stand out:

- Edge Computing: One of the most significant trends in managing network latency is the rise of edge computing. By moving computation closer to the edge of the network, data processing and analysis can occur in proximity to where the data is generated. This reduces the need to send data back and forth to centralized servers, significantly decreasing latency in real-time applications.

- 5G Technology: The rollout of 5G is expected to reduce latency substantially for real-time applications. With significantly higher data transfer speeds and lower latency than previous generations of cellular networks, 5G enables faster and more reliable communication for real-time applications like video streaming, online gaming, and teleconferencing.

- Content Delivery Networks (CDNs): CDNs play a crucial role in managing network latency by optimizing content delivery through strategically placed servers across the globe. By caching and delivering content from servers closest to the end-users, CDNs can significantly reduce latency in real-time applications, especially for global audiences.

- Machine Learning and AI: Advancements in machine learning and artificial intelligence are being leveraged to predict and mitigate network latency in real-time applications. By analyzing network traffic patterns, predicting potential bottlenecks, and dynamically adjusting routing paths, AI-powered solutions can optimize network performance and reduce latency for users.
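Latency prediction does not have to start with a full machine-learning model. As a deliberately simple statistical stand-in, the sketch below keeps an exponentially weighted moving average (EWMA) of latency for each candidate path and picks the path with the lowest estimate. The class and path names are hypothetical, and the smoothing factor of 1/8 mirrors the one TCP uses for its smoothed RTT in RFC 6298.

```python
class EwmaPathSelector:
    """Track a smoothed latency estimate per path and choose the lowest."""

    def __init__(self, paths, alpha: float = 0.125):
        self.alpha = alpha  # weight given to each new sample
        self.estimates = {p: None for p in paths}

    def observe(self, path, rtt_ms: float) -> None:
        prev = self.estimates[path]
        # The first sample seeds the estimate; later ones are blended in.
        self.estimates[path] = (rtt_ms if prev is None
                                else (1 - self.alpha) * prev + self.alpha * rtt_ms)

    def best(self):
        """Return the path with the lowest estimate (requires at least one observation)."""
        known = {p: e for p, e in self.estimates.items() if e is not None}
        return min(known, key=known.get)


sel = EwmaPathSelector(["path-a", "path-b"])
for rtt in [30, 31, 29]:
    sel.observe("path-a", rtt)
for rtt in [25, 60, 65]:
    sel.observe("path-b", rtt)
print(sel.best())  # path-a
```

Although `path-b` started with the lowest reading, its recent 60 to 65 ms samples push its estimate above that of `path-a`, so the selector chooses `path-a`.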

Looking ahead, real-time applications will continue to focus on minimizing network latency to provide seamless user experiences. Edge computing, 5G, CDNs, and AI are expected to play a pivotal role in how latency is managed and in keeping real-time applications performing well.

FAQs: Understanding Network Latency in Real-Time Applications

What is network latency in real-time applications?

Network latency in real-time applications refers to the delay in data transmission between devices over a network. It is the time taken for data to travel from the sender to the receiver, and high network latency can cause delays in real-time communication and interactions.

How does network latency affect real-time applications?

Network latency can impact the performance of real-time applications by causing delays in data transmission, leading to lag or disruptions in audio, video, or other types of communication. In real-time applications such as video conferencing or online gaming, even a small amount of latency can make a noticeable difference in user experience.

What factors contribute to network latency in real-time applications?

Several factors can contribute to network latency in real-time applications, including the physical distance between devices, network congestion, the quality of network infrastructure, and the processing speed of devices. Packet loss (which can force retransmissions) and bandwidth limitations can add further delay, while jitter, the variation in latency over time, disrupts real-time audio and video even when average latency is acceptable.

How can network latency be reduced in real-time applications?

To reduce network latency in real-time applications, optimizing network configurations, using quality networking hardware, and implementing protocols that prioritize real-time data transmission can help. Additionally, selecting servers closer to the end-users, using content delivery networks (CDNs), and reducing unnecessary data transfers can also improve network latency.

Why is network latency important in real-time applications?

Network latency is crucial in real-time applications as any delay in data transmission can impact the user experience and the functionality of the application. Low latency is essential for smooth real-time communication, interactions, and collaboration in applications such as video conferencing, online gaming, or live streaming. Understanding and managing network latency is vital for ensuring optimal performance in real-time applications.
